Luminar Technologies and Applied Intuition are partnering to provide the first validated sensor models of Luminar lidars for advanced driver-assistance systems (ADAS) and automated driving (AD) simulation. The partnership aims to give automakers access to high-fidelity lidar data for use in their simulations. Automakers will be able to use Applied Intuition’s sensor simulator, Sensor Sim, to validate their lidar-based ADAS or AD software in virtual environments while reducing the need for expensive real-world tests.

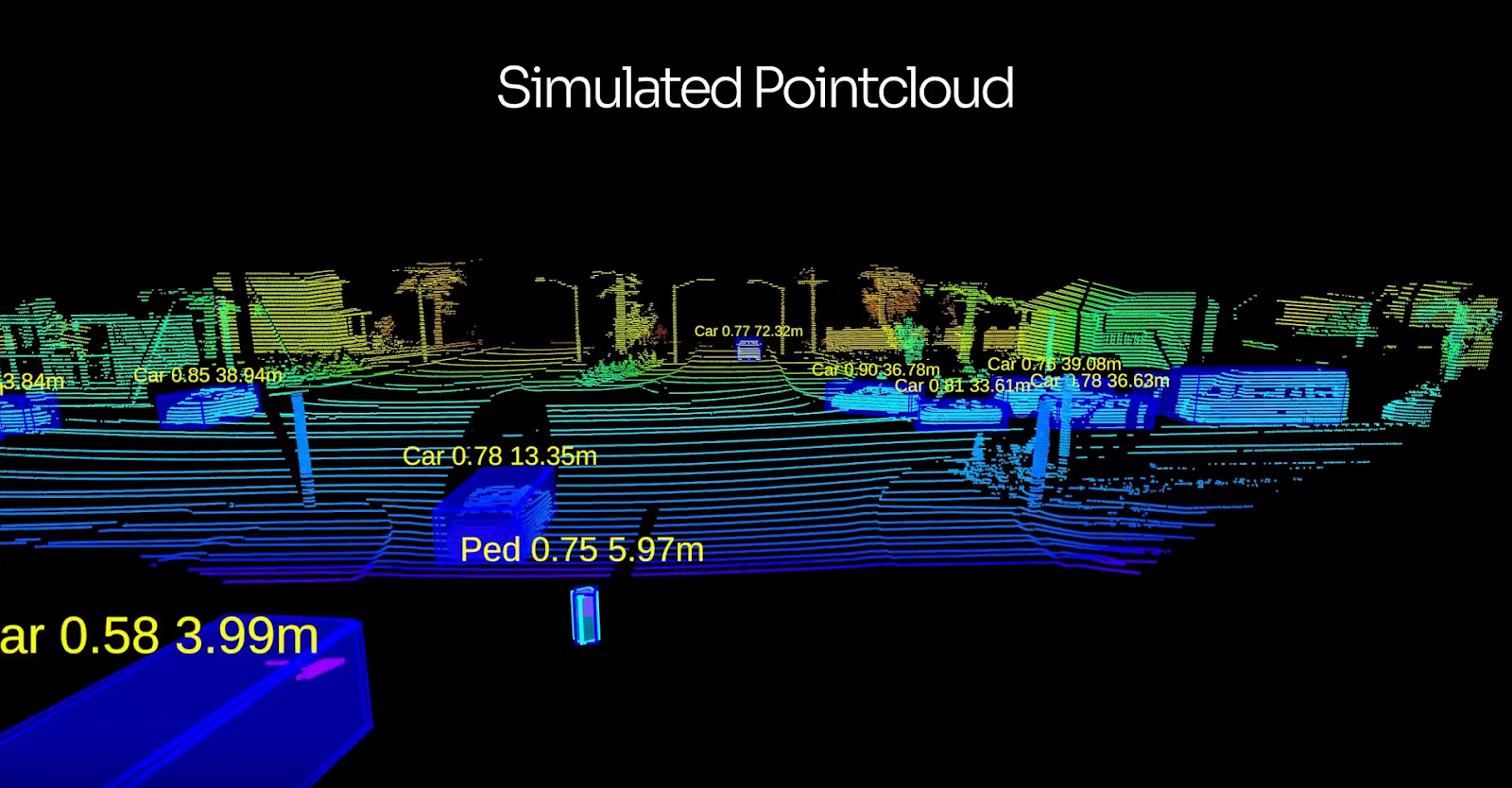

Sensor Sim enables automakers to create and simulate edge case scenarios with perception and planning systems in the loop. Its integrated Luminar lidar models and real-time point cloud generation accurately reproduce the behavior of the lidar hardware installed in development vehicles.
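To make "real-time point cloud generation" more concrete, here is a deliberately simplified Python sketch of how a simulated lidar can produce points: cast one ray per beam direction and keep the nearest surface it hits. The sphere-only scene, the function names, and the scan pattern are illustrative assumptions. This is not Sensor Sim's API or Luminar's sensor model; a validated sensor model typically also has to capture effects such as beam divergence, surface reflectivity, and measurement noise.

```python
import math

def raycast_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the first hit on a sphere, or None."""
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    dx, dy, dz = direction
    # Solve |o - c + t*d|^2 = r^2, i.e. t^2 + 2*b*t + c = 0 for unit d.
    b = ox * dx + oy * dy + oz * dz
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    t = -b - math.sqrt(disc)
    if t < 0:
        t = -b + math.sqrt(disc)  # origin inside the sphere: use the far hit
    return t if t >= 0 else None  # negative t means the sphere is behind the sensor

def simulate_scan(origin, spheres, azimuths_deg, elevations_deg, max_range_m=200.0):
    """Cast one ray per (azimuth, elevation) pair; return hit points as (x, y, z)."""
    points = []
    for el in elevations_deg:
        for az in azimuths_deg:
            a, e = math.radians(az), math.radians(el)
            # Unit direction vector (x forward, y left, z up).
            d = (math.cos(e) * math.cos(a), math.cos(e) * math.sin(a), math.sin(e))
            hits = [t for t in (raycast_sphere(origin, d, c, r) for c, r in spheres)
                    if t is not None and t <= max_range_m]
            if hits:
                t = min(hits)  # the nearest surface occludes anything behind it
                points.append(tuple(origin[i] + t * d[i] for i in range(3)))
    return points
```

For example, a 1 m sphere centered 10 m ahead, scanned at 1° azimuth steps from -10° to 10° in a single horizontal plane, returns 11 points, including one at (9, 0, 0) on the near surface.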

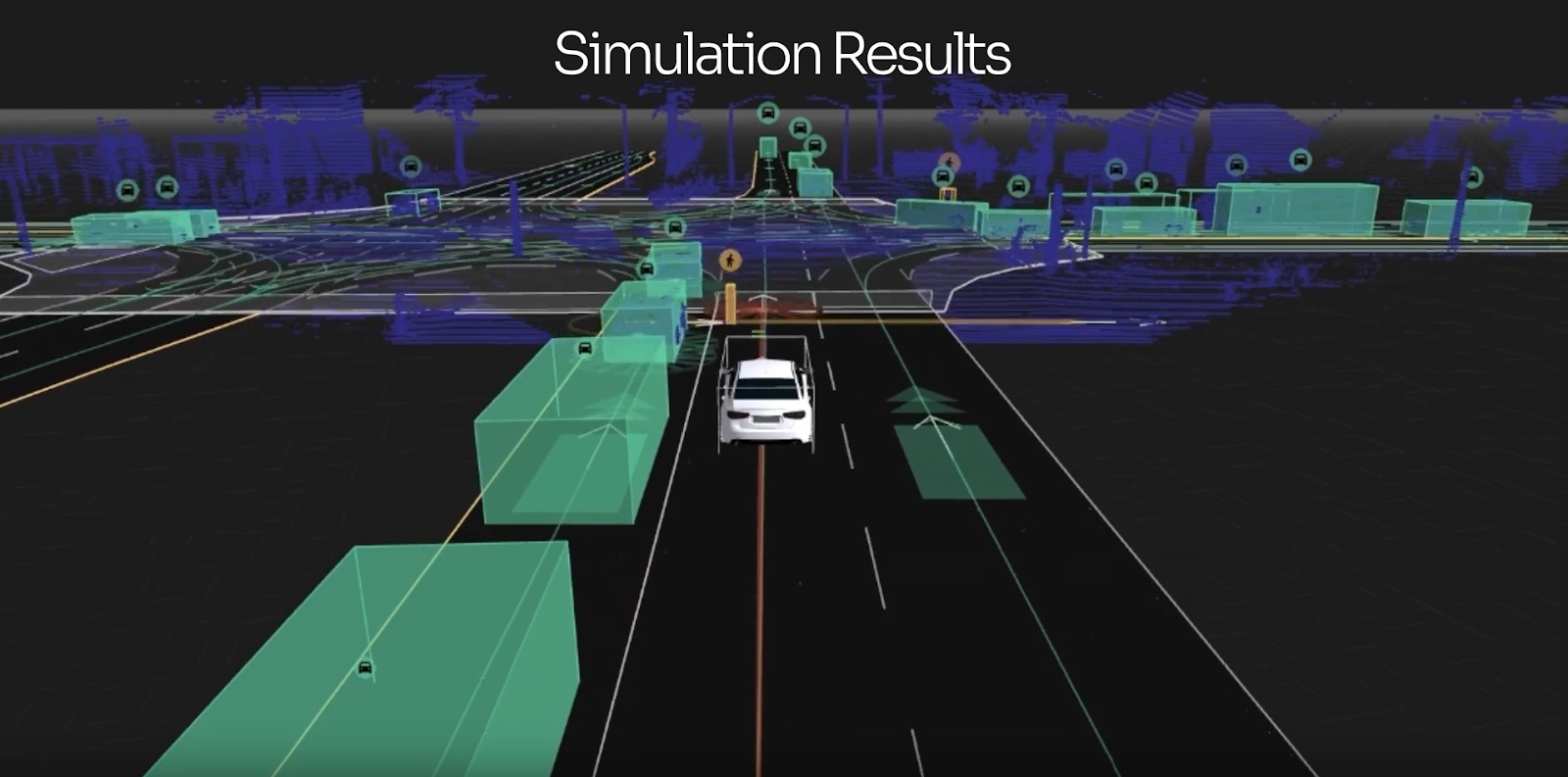

Luminar uses Applied Intuition’s simulation tools to develop its ADAS stack, Sentinel. Sentinel is a fully redundant, AI- and geometry-based safety solution that can dramatically reduce vehicle accidents and injuries. By integrating Sentinel with Object Sim, Luminar can now run the full Sentinel pipeline within the virtual environment and control simulated vehicles the same way it controls real-world vehicles, including acceleration, steering, and braking. Closed-loop simulation lets Luminar efficiently develop against and validate the real-world edge cases that remain challenging for today’s perception systems and active safety functions.

Iterative Development

For Luminar’s software team, development and testing have always gone hand in hand, using industry-best methods to ensure that no line of code goes untested. For higher-level software integration tests, Luminar has consistently tested all levels of its algorithms in its own fleet of development vehicles. However, full-stack testing scales better in simulation than in vehicles, each of which must be retrofitted with drive-by-wire systems, compute, and sensors. With Sensor Sim’s high accuracy, Luminar can now simulate its lidar sensor’s output and run the full Sentinel stack in a simulated environment. The resulting point cloud, perception, and actuation fidelity rivals that of running the software stack in an actual development vehicle.

Conducting full-stack testing in Applied Intuition’s simulation tools allows many more Luminar developers to test portions of their software concurrently at the full-system level, because they do not need a test vehicle. Simulation tests are also 100% repeatable, so edge cases can be isolated, tested, and retested after lessons learned have been incorporated. With these capabilities, developers and Luminar’s continuous integration (CI) team can run large-scale experiments asynchronously across many scenario variations.
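As a rough illustration of why repeatability and scenario variation go together, the Python sketch below sweeps a small grid of variations of one hypothetical pedestrian-crossing test and runs them concurrently. Everything here is an assumption for illustration: the parameter names and values, the 0.5 s reaction time, the 6 m/s² braking rate, and the toy `run_scenario` function, which stands in for a real simulation run and is not an Applied Intuition or Luminar API. Repeatability comes from giving each run its own seeded random generator, so the same inputs always produce the same result.

```python
import itertools
import random
from concurrent.futures import ThreadPoolExecutor

# Hypothetical scenario parameters for a pedestrian-crossing test.
EGO_SPEEDS_MPS = [8.0, 11.0, 14.0]
VISIBILITY_M = [15.0, 30.0, 60.0]
SEEDS = [0, 1]
REACTION_TIME_S = 0.5   # assumed latency before braking starts
BRAKE_DECEL_MPS2 = 6.0  # assumed constant braking deceleration

def run_scenario(ego_speed, visibility, seed):
    """Toy stand-in for one simulation run; not a real simulator API."""
    rng = random.Random(seed)  # per-run seeded RNG makes every rerun identical
    detection_range = visibility + rng.uniform(-5.0, 5.0)
    stopping_distance = (ego_speed * REACTION_TIME_S
                         + ego_speed ** 2 / (2.0 * BRAKE_DECEL_MPS2))
    return {"ego_speed": ego_speed, "visibility": visibility, "seed": seed,
            "collision_free": detection_range > stopping_distance}

def sweep():
    """Run the full parameter grid concurrently; results keep grid order."""
    grid = itertools.product(EGO_SPEEDS_MPS, VISIBILITY_M, SEEDS)
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda args: run_scenario(*args), grid))
```

Because each run owns its seeded generator, `sweep() == sweep()` holds no matter how the runs are scheduled; moving from threads to processes or a compute cluster would change throughput, not results.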

Reduced Time to Market

Production-grade automotive hardware and software require extensive testing campaigns to validate performance over hundreds of thousands of operational hours. Achieving this statistically significant test coverage has traditionally been extremely time-consuming and expensive. The collaboration between Applied Intuition and Luminar significantly shortens development time by moving large parts of the required data collection from the physical vehicle fleet to a virtual one, which can be parallelized to run many scenarios concurrently.

How the Luminar Lidar Navigates a Challenging Simulation Scenario

Many of today’s automatic emergency braking (AEB) systems can handle clearly visible pedestrians and vehicles but fail in more challenging scenarios involving limited visibility and tight timing. The video below shows a simulated sample scenario in which a small child walks onto the street in a low-visibility environment. The vehicle must immediately identify the collision threat and apply the brakes. In this example, the Sentinel software package correctly identifies and tracks the pedestrian, evaluates the collision probability, calculates an avoidance trajectory, and applies the brakes in time to prevent a collision.
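The detect, assess, and brake chain in this scenario can be illustrated with a simple time-to-collision (TTC) rule. The Python sketch below is a generic textbook-style heuristic, not Sentinel's method: the `Track` fields, the 1.5 s threshold, and the 9 m/s² braking cap are assumptions, a deterministic TTC check stands in for Sentinel's collision-probability estimate, and trajectory planning is omitted. When a tracked object's TTC drops below the threshold, it requests the constant deceleration v²/(2d) needed to cancel the closing speed v within the remaining gap d, capped at the assumed maximum.

```python
from dataclasses import dataclass

@dataclass
class Track:
    """Hypothetical output of the tracking stage for one object."""
    gap_m: float              # distance to the object along the ego path
    closing_speed_mps: float  # positive when the gap is shrinking
    in_path: bool             # object predicted to cross the ego path

def time_to_collision(track):
    """Seconds until contact at the current closing speed; inf if none expected."""
    if not track.in_path or track.closing_speed_mps <= 0.0:
        return float("inf")
    return track.gap_m / track.closing_speed_mps

def aeb_command(tracks, ttc_threshold_s=1.5, max_decel_mps2=9.0):
    """Return the requested deceleration in m/s^2 (0.0 means no intervention)."""
    request = 0.0
    for track in tracks:
        if time_to_collision(track) >= ttc_threshold_s:
            continue  # threat is not imminent yet
        if track.gap_m <= 0.0:
            needed = max_decel_mps2
        else:
            # Constant deceleration that cancels the closing speed within the gap.
            needed = track.closing_speed_mps ** 2 / (2.0 * track.gap_m)
        request = max(request, min(needed, max_decel_mps2))
    return request
```

For example, a pedestrian 10 m ahead with a 10 m/s closing speed has a TTC of 1.0 s, so `aeb_command([Track(10.0, 10.0, True)])` requests 5.0 m/s² of braking; the same pedestrian 30 m ahead (TTC 3.0 s) triggers no intervention yet.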

End-to-end simulation of challenging scenarios like this one pushes the detection capabilities of sensors and software to their limits. Tests like these are necessary to improve the real-world performance of ADAS and AD systems faster and at higher quality, ultimately delivering safety benefits for everyone.
